Learn to use Artificial Intelligence in your Ph.D. studies without risk

The advent of Artificial Intelligence offers enormous opportunities for innovation and improvement across many domains and tasks, but it also requires careful consideration of the risks involved.

Is it okay to use Artificial Intelligence in your Ph.D. research? Absolutely, provided that you take the tool seriously and use it in a responsible and acceptable manner.

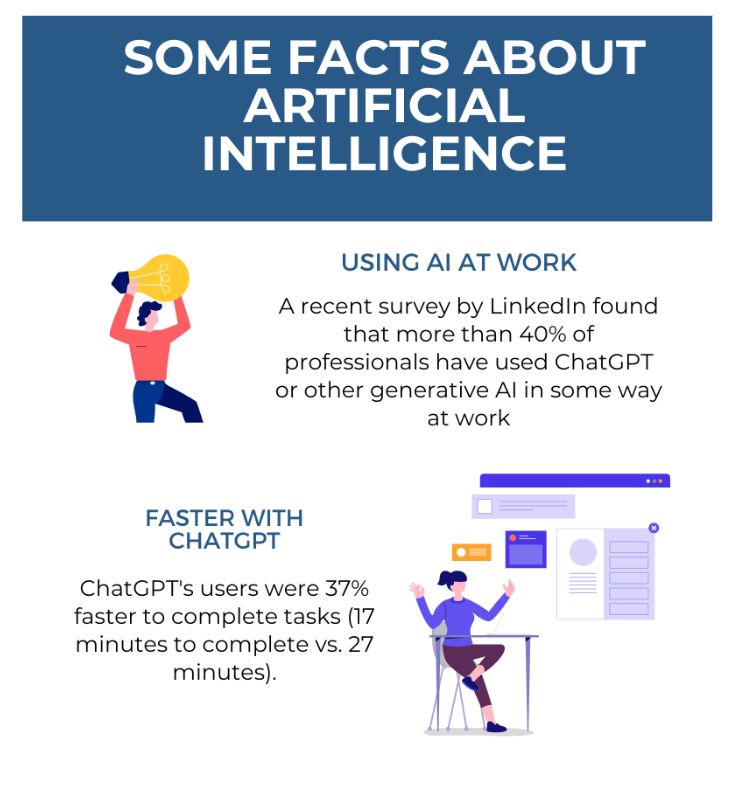

A recent survey by LinkedIn found that more than 40% of professionals have used ChatGPT or other generative AI in some way at work. However, 68% admit that they are using it without their boss's knowledge. This suggests a level of uncertainty about how companies might perceive the use of such technology in the workplace.

Tools like ChatGPT, Bard, and Midjourney can definitely improve productivity, and even contribute to creativity, by automating tasks such as writing texts, creating graphics, composing emails, building slideshows, and much more.

One survey showed that “ChatGPT users were 37% faster to complete tasks (17 minutes to complete vs. 27 minutes) with more or less similar ratings (quality level), and as ChatGPT users repeated their tasks, the quality of their work increased significantly faster.”

“A new study from Stanford and MIT researchers found that using a GAI-based assistant increased productivity by 14% on average, with the greatest impact on low-skilled and novice workers who were able to complete work 35% faster with the help of the tool. They also found that AI support improved customer sentiment, reduced requests for managerial intervention, and improved employee retention.”

PRIVACY: It is important to be aware of the data you share when writing prompts, which may include figures that are valuable to the company or even personal data. A real case is that of Samsung, where employees leaked confidential company information to ChatGPT while trying to fix source code and troubleshoot faulty equipment, and when asking the chatbot to generate meeting minutes.
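One way to act on this is to strip obvious identifiers from a prompt before it leaves your machine. The snippet below is only a minimal sketch of that idea: the `PATTERNS` table and the `redact` function are hypothetical names invented for illustration, the two regular expressions are deliberately simple assumptions, and they will not catch every kind of sensitive data (names, project codes, unpublished results).

```python
import re

# Hypothetical, deliberately simple patterns (assumptions for illustration).
# A real project would tailor these to its own kinds of sensitive data.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Summarize the interview with jane.doe@example.edu, phone +1 555 010 9999."
print(redact(prompt))
```

A filter like this reduces accidental leaks but does not replace judgment: confidential material should still be reviewed by hand before it is pasted into any external tool.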

The same case shows how SECURITY can also be compromised: leaked data is left at the mercy of hackers.

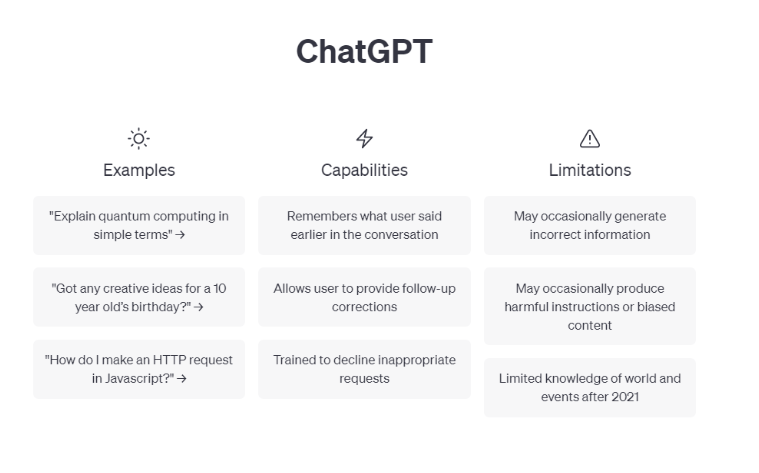

VERACITY: When using Artificial Intelligence tools, it is vital to validate the information they produce. These systems can "hallucinate", that is, make up information.

And of course, ETHICS: using AI-generated information indiscriminately, without minimal verification and without respecting copyright, compromises the integrity of your work.

We are living in a time of great advances, and we should take advantage of them consciously and responsibly to optimize and improve our work. That means always contributing value to research and using AI as a guide, not as the solution to our projects.